Model Collapse

The Silent Invasion of AI Content

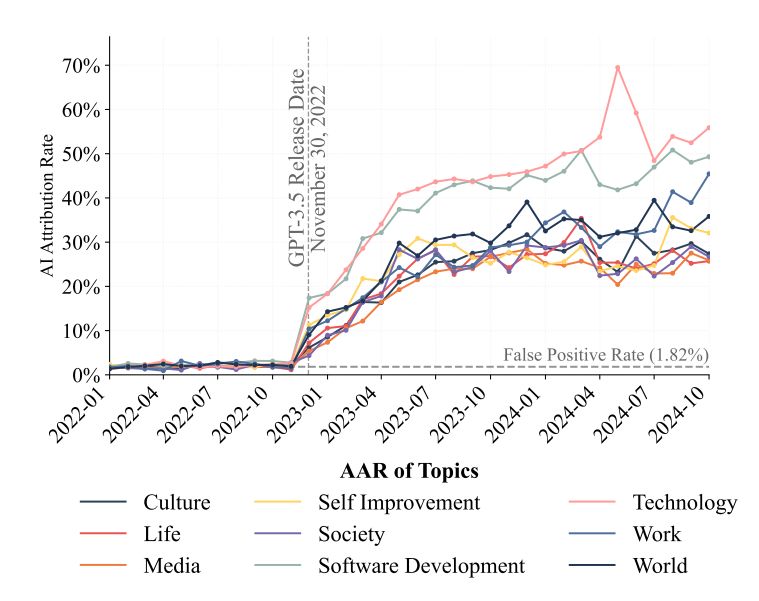

A growing share of content on the Internet is now AI-generated. As LLMs (Large Language Models) become part of our daily lives, they are also becoming easier and more accessible to use. Social media platforms have become true gold mines for this type of content: more and more users rely on ChatGPT or Mistral’s Le Chat to write or rewrite their posts before publishing them (maybe this post went through that too? 👀). A striking example is Quora, where the estimated share of AI-generated content rose from about 2% in 2022 to about 38% in 2024!

A Systemic Risk: Model Collapse 💥

The problem is that the Internet also serves, indirectly, as the foundation for the datasets these models are trained on. A model trained recursively on so-called "synthetic" data, meaning content generated by earlier models, gradually loses information about rare elements (the tails of the distribution). Its responses become more generic, and existing biases are amplified. The model collapses: this is what we call Model Collapse 💥.
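The mechanism described above can be illustrated with a deliberately tiny simulation. This is a toy sketch of the general idea, not the experimental setup of Shumailov et al. The "model" is just a table of token counts. The Zipf-shaped "human" distribution, vocabulary size, sample size, number of generations and the `human_share` knob are all arbitrary assumptions chosen for illustration.

```python
import random
from collections import Counter


def zipf_weights(vocab_size: int, exponent: float = 1.1) -> list[float]:
    """Hypothetical "human" distribution: a few frequent tokens, a long tail of rare ones."""
    return [1.0 / rank ** exponent for rank in range(1, vocab_size + 1)]


def simulate_collapse(vocab_size: int = 1000, sample_size: int = 5000,
                      generations: int = 30, human_share: float = 0.0,
                      seed: int = 0) -> list[int]:
    """Return how many distinct tokens each generation's toy model still knows.

    Each generation "trains" a model by counting token frequencies in its
    training data. It then "generates" the next generation's training data by
    sampling from those frequencies. A fraction `human_share` of each new
    dataset can instead be drawn from the original human distribution.
    """
    rng = random.Random(seed)
    vocab = list(range(vocab_size))
    human_weights = zipf_weights(vocab_size)

    # Generation 0 is trained purely on human data.
    data = rng.choices(vocab, weights=human_weights, k=sample_size)
    support_sizes = []
    for _ in range(generations):
        # "Training": the toy model is just the empirical token counts.
        model = Counter(data)
        support_sizes.append(len(model))

        # "Generation": sample the next dataset from the model's own output.
        # A token with zero count can never be sampled again.
        tokens = list(model)
        counts = [model[t] for t in tokens]
        n_human = int(human_share * sample_size)
        synthetic = rng.choices(tokens, weights=counts, k=sample_size - n_human)

        # Optionally mix in fresh human-written data.
        fresh = rng.choices(vocab, weights=human_weights, k=n_human)
        data = synthetic + fresh
    return support_sizes


if __name__ == "__main__":
    only_synthetic = simulate_collapse()
    with_human_data = simulate_collapse(human_share=0.2)
    print("distinct tokens, generation 0:", only_synthetic[0])
    print("last generation, synthetic only:", only_synthetic[-1])
    print("last generation, 20% human data:", with_human_data[-1])
```

Each generation can only resample tokens that already appear in its training data. So a rare token that happens to receive zero samples is lost for good, and with `human_share` at 0 the number of distinct tokens never increases. Setting `human_share` above 0 is a crude stand-in for keeping genuine human data in the training mix.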

What About Tomorrow?

Today, mainstream models are not trained exclusively on synthetic data. But if the current trend continues, training datasets will keep growing and will contain an increasingly large share of AI-generated content. It will then be crucial to pay close attention to how these datasets are built and to reliably identify synthetic content, or we risk poisoning our future models.

📚 Sources

- Forbes, "Is AI quietly killing itself – and the Internet?" (2024)

- Zhen Sun et al., "Are We in the AI-Generated Text World Already? Quantifying and Monitoring AIGT on Social Media"

- AI World Today, "AI-Generated Content Surges on Social Media: New Study Reveals Startling Trends" (2024)

- Ilia Shumailov et al., "AI models collapse when trained on recursively generated data" (2024)

- Pablo Villalobos et al., "Position: Will we run out of data? Limits of LLM scaling based on human-generated data" (2024)